Model Context Protocol (MCP): Open Standard for AI Integration

The Model Context Protocol (MCP) is an open standard that lets AI systems connect to data sources, tools, and services through one common interface, instead of through a custom integration for each.

An open standard introduced by Anthropic

What is MCP?

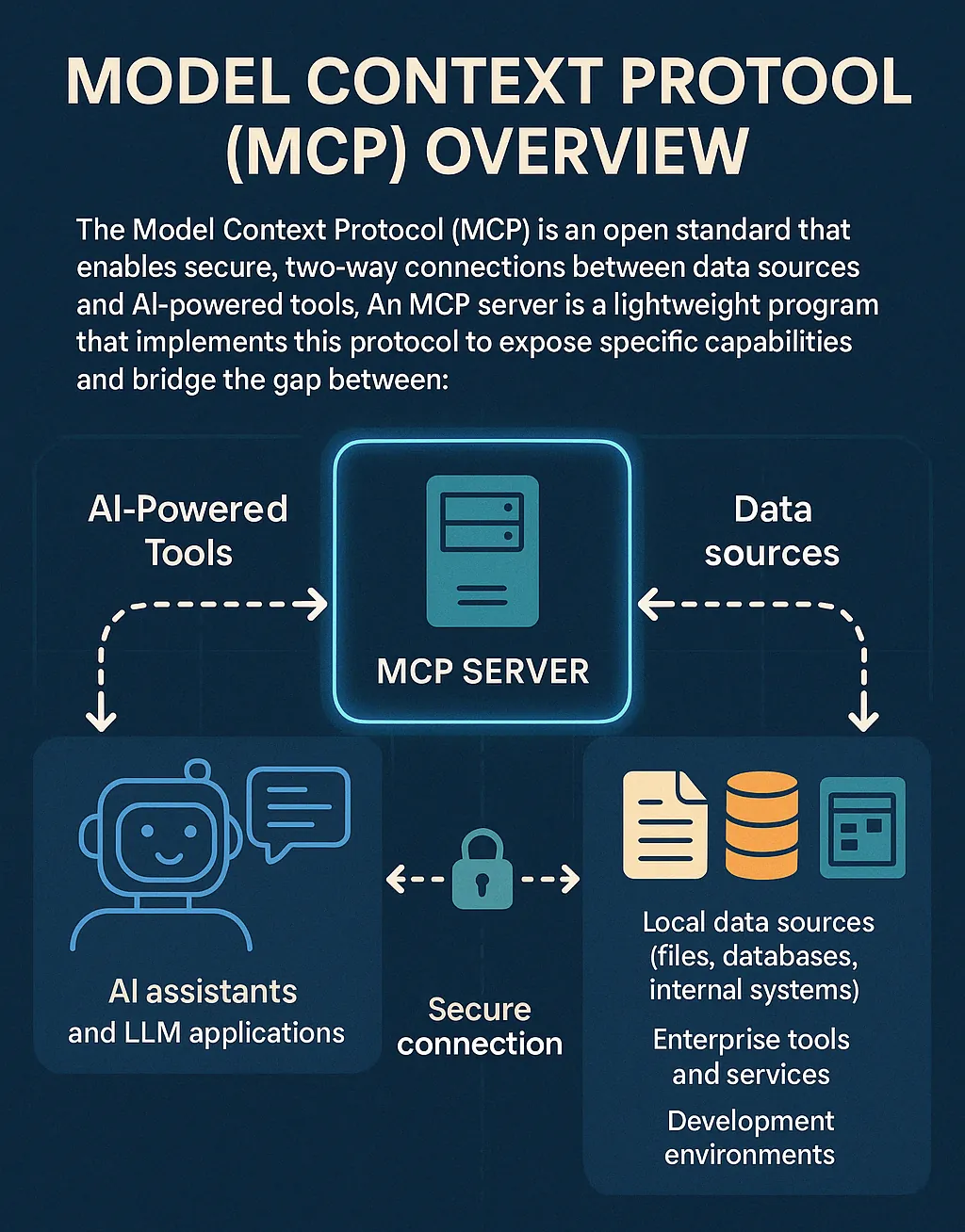

The Model Context Protocol (MCP) is an open standard that enables connections between AI systems and external services. Think of MCP servers as "apps" for AI: they extend what an AI system can do in much the same way mobile apps extend the capabilities of smartphones.

By providing a single, standardized interface, MCP lets AI models access, interact with, and act on information without a custom integration for each data source.
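Under the hood, a host application (such as Claude Desktop) runs one MCP client per server connection, and client and server exchange JSON-RPC 2.0 messages. The sketch below builds the opening messages of a session in plain Python, without any MCP SDK: an `initialize` request, the `notifications/initialized` notification, and a first `tools/list` request. The protocol version string and the client name are example values, not requirements.

```python
import json
from itertools import count
from typing import Optional

# JSON-RPC 2.0 matches each response to its request by "id", so ids must be unique per session.
_next_id = count(1)


def make_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request, which expects a response."""
    msg = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def make_notification(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 notification: no "id", so the receiver sends no response."""
    msg = {"jsonrpc": "2.0", "method": method}
    if params is not None:
        msg["params"] = params
    return msg


# Handshake: the client announces its protocol revision, capabilities, and identity.
initialize = make_request("initialize", {
    "protocolVersion": "2024-11-05",  # example revision string; use the one your SDK targets
    "capabilities": {},
    "clientInfo": {"name": "example-client", "version": "0.1.0"},  # hypothetical client
})
# After the server answers, the client confirms that the handshake is complete.
initialized = make_notification("notifications/initialized")
# From here on, the client can discover what the server offers.
list_tools = make_request("tools/list")

for msg in (initialize, initialized, list_tools):
    # Over the stdio transport, each message travels as a single line of JSON.
    print(json.dumps(msg))
```

In practice an SDK builds and parses these messages for you; writing them out by hand simply shows that every server, whatever it connects to, speaks the same small set of methods.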

MCP architecture diagram

Why MCP Matters

Rapid Integration

Connect to dozens of services using a single, standardized protocol instead of building custom integrations

Simplified Development

Abstract away the complexities of different APIs and focus on building great AI experiences

Ecosystem Growth

Leverage community-built MCP servers and contribute your own to help others

Modular Architecture

Mix and match servers to create powerful combinations of capabilities

Key Capabilities

Resources

Access structured data from files, databases, APIs, and more

- Code repositories
- Documents & wikis
- Database queries
- API responses
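A server exposes resources through two requests: `resources/list`, which returns descriptors identified by URI, and `resources/read`, which returns the contents behind one URI. The following is a minimal, SDK-free sketch of a server-side handler backed by an in-memory dictionary; the URIs and file contents are hypothetical, and a real server would read from disk, a database, or an API instead.

```python
# Hypothetical in-memory data store: URI -> (MIME type, text content).
RESOURCES = {
    "file:///project/README.md": ("text/markdown", "# Demo project\n"),
    "file:///project/schema.sql": ("text/plain", "CREATE TABLE users (id INT);\n"),
}


def handle(request: dict) -> dict:
    """Answer a JSON-RPC 2.0 request for the resources/* methods."""
    method, params = request["method"], request.get("params", {})
    if method == "resources/list":
        result = {"resources": [
            {"uri": uri, "name": uri.rsplit("/", 1)[-1], "mimeType": mime}
            for uri, (mime, _) in RESOURCES.items()
        ]}
    elif method == "resources/read" and params.get("uri") in RESOURCES:
        uri = params["uri"]
        mime, text = RESOURCES[uri]
        result = {"contents": [{"uri": uri, "mimeType": mime, "text": text}]}
    elif method == "resources/read":
        # -32602 is the generic JSON-RPC "Invalid params" code; the spec revision
        # you target may define a more specific code for a missing resource.
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32602, "message": f"Unknown resource: {params.get('uri')}"}}
    else:
        # -32601 is the standard JSON-RPC "Method not found" code.
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32601, "message": f"Method not found: {method}"}}
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


listing = handle({"jsonrpc": "2.0", "id": 1, "method": "resources/list"})
readme = handle({"jsonrpc": "2.0", "id": 2, "method": "resources/read",
                 "params": {"uri": "file:///project/README.md"}})
print(readme["result"]["contents"][0]["text"])
```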

Prompts

Reusable templates for common AI workflows and patterns

- Code review templates
- Documentation formats
- Analysis patterns
- Custom workflows
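Prompts are discovered with `prompts/list` and filled in with `prompts/get`, which takes the prompt's name plus its arguments and returns ready-to-send messages. Below is a minimal sketch of a server-side code review template. The prompt name `code_review`, its `code` and `language` arguments, and the internal `defaults` field are hypothetical choices for illustration; only the shapes of the list and get results follow MCP.

```python
# Hypothetical prompt registry. "defaults" is internal to this sketch and is never sent to clients.
PROMPTS = {
    "code_review": {
        "description": "Review code for bugs and style issues",
        "arguments": [
            {"name": "code", "description": "The code to review", "required": True},
            {"name": "language", "description": "Programming language", "required": False},
        ],
        "defaults": {"language": "source"},
        "template": "Please review this {language} code for bugs and style issues:\n\n{code}",
    },
}


def list_prompts() -> dict:
    """Result body for prompts/list: advertise name, description, and arguments only."""
    return {"prompts": [
        {"name": name, "description": p["description"], "arguments": p["arguments"]}
        for name, p in PROMPTS.items()
    ]}


def get_prompt(name: str, arguments: dict) -> dict:
    """Result body for prompts/get: check required arguments, then fill the template."""
    prompt = PROMPTS[name]
    missing = [a["name"] for a in prompt["arguments"]
               if a["required"] and a["name"] not in arguments]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    # Caller-supplied values override the defaults for optional arguments.
    text = prompt["template"].format(**{**prompt["defaults"], **arguments})
    return {
        "description": prompt["description"],
        "messages": [{"role": "user", "content": {"type": "text", "text": text}}],
    }


review = get_prompt("code_review", {"language": "Python", "code": "print('hi')"})
print(review["messages"][0]["content"]["text"])
```

Keeping the template on the server means every client that connects gets the same, centrally maintained review workflow.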

Tools

Executable functions AI can invoke to take actions

- Database operations
- API calls
- File operations
- Complex calculations
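Tools are advertised by `tools/list`, each with a JSON Schema (`inputSchema`) describing its arguments, and invoked by `tools/call`. The MCP specification distinguishes caller mistakes (an unknown tool or invalid arguments), which are protocol errors, from failures during execution, which are returned as a normal result with `isError: true` so the model can see them and react. A minimal, SDK-free sketch of the server side follows; the `add` and `divide` tools and the `InvalidParams` exception are hypothetical, and a real server should validate arguments against the full schema.

```python
class InvalidParams(Exception):
    """Caller mistake (unknown tool, missing argument).

    A dispatcher would report this as a JSON-RPC error with code -32602.
    """


def _number_schema(*names: str) -> dict:
    """JSON Schema for an object whose listed properties are all required numbers."""
    return {
        "type": "object",
        "properties": {n: {"type": "number"} for n in names},
        "required": list(names),
    }


# Hypothetical tools: name -> (definition advertised via tools/list, implementation).
TOOLS = {
    "add": ({"name": "add", "description": "Add two numbers",
             "inputSchema": _number_schema("a", "b")},
            lambda args: args["a"] + args["b"]),
    "divide": ({"name": "divide", "description": "Divide a by b",
                "inputSchema": _number_schema("a", "b")},
               lambda args: args["a"] / args["b"]),
}


def list_tools() -> dict:
    """Result body for tools/list."""
    return {"tools": [definition for definition, _ in TOOLS.values()]}


def call_tool(name: str, arguments: dict) -> dict:
    """Result body for tools/call."""
    if name not in TOOLS:
        raise InvalidParams(f"Unknown tool: {name}")
    definition, impl = TOOLS[name]
    missing = [k for k in definition["inputSchema"]["required"] if k not in arguments]
    if missing:
        raise InvalidParams(f"Missing arguments: {', '.join(missing)}")
    try:
        value = impl(arguments)
    except Exception as exc:
        # Execution failures become results, so the model can see and react to them.
        return {"content": [{"type": "text", "text": f"Error: {exc}"}], "isError": True}
    return {"content": [{"type": "text", "text": str(value)}], "isError": False}


print(call_tool("add", {"a": 2, "b": 3}))     # text content "5"
print(call_tool("divide", {"a": 1, "b": 0}))  # isError is True
```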

How It Works

Choose Your Servers

Select MCP servers from the ecosystem that connect to the services you need (databases, APIs, file systems, etc.)

Configure Your Host

Set up your AI application (Claude Desktop, VS Code, Cursor, etc.) to connect to these MCP servers
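In Claude Desktop, for example, servers are declared under an `mcpServers` key in `claude_desktop_config.json`, each entry giving the command that launches the server and its arguments. The sketch below generates such a file with Python's `json` module so the structure is easy to see. It uses the reference filesystem server launched through `npx` as an example; the directory path is a placeholder, and other hosts such as VS Code and Cursor use their own configuration files and may use different keys.

```python
import json

# One entry per server: a name you choose, the launch command, and its arguments.
config = {
    "mcpServers": {
        "filesystem": {
            "command": "npx",
            "args": [
                "-y",  # let npx install the package without prompting
                "@modelcontextprotocol/server-filesystem",
                "/path/to/allowed/directory",  # placeholder: a directory the server may access
            ],
        },
    },
}

text = json.dumps(config, indent=2)
print(text)  # save as claude_desktop_config.json, then restart the host application
```

Adding another server is just another key under `mcpServers`, which is what makes mixing and matching servers cheap.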

Grant Permissions

Authorize which capabilities the AI can access, so you decide what data it can see and which actions it can take
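Permission checks live in the host application: the MCP specification asks implementors to obtain explicit user consent before invoking tools, but it does not enforce this at the protocol level and leaves the mechanism to the host. One possible policy, sketched below with a hypothetical `Policy` class and hypothetical server and tool names, is an allowlist in which read-only tools run freely, tools that change data need the user's confirmation each time, and everything else is denied.

```python
from dataclasses import dataclass, field


@dataclass
class Policy:
    """Hypothetical host-side permission policy for MCP tool calls."""
    auto_approve: set = field(default_factory=set)        # (server, tool) pairs that run without asking
    needs_confirmation: set = field(default_factory=set)  # pairs that need a user "yes" every time

    def check(self, server: str, tool: str, user_confirmed: bool = False) -> bool:
        """Return True if the host may forward this tools/call to the server."""
        key = (server, tool)
        if key in self.auto_approve:
            return True
        if key in self.needs_confirmation:
            return user_confirmed
        return False  # anything not listed is denied by default


policy = Policy(
    auto_approve={("postgres", "query")},
    needs_confirmation={("postgres", "execute"), ("slack", "post_message")},
)

print(policy.check("postgres", "query"))                           # True
print(policy.check("slack", "post_message"))                       # False until the user confirms
print(policy.check("slack", "post_message", user_confirmed=True))  # True
print(policy.check("github", "delete_repo", user_confirmed=True))  # False: not on any list
```

Denying by default means a newly connected server gains no powers until someone explicitly grants them.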

Use Enhanced AI

Your AI assistant can now access real data, use actual tools, and perform concrete actions on your behalf

Chain Multiple Servers

Combine capabilities from multiple servers to accomplish complex, multi-step tasks automatically
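When several servers are connected, the host merges their tool lists and routes each call back to the server that owns the tool. The sketch below stands in for real server connections with plain Python functions. The server names, tool names, and the `server.tool` prefixing scheme are all hypothetical, and in practice the model, not hard-coded logic, decides which tool to call next.

```python
from typing import Callable, Dict

# Hypothetical stand-ins for two connected servers: tool name -> implementation.
SERVERS: Dict[str, Dict[str, Callable[..., str]]] = {
    "tracker": {"get_issue": lambda issue_id: f"Issue {issue_id}: login page times out"},
    "chat": {"post_message": lambda channel, text: f"posted to {channel}: {text}"},
}


def aggregate_tools() -> list:
    """Merge every server's tools into one list, prefixed to avoid name clashes."""
    return sorted(f"{server}.{tool}" for server, tools in SERVERS.items() for tool in tools)


def route(qualified_name: str, **arguments) -> str:
    """Send a call to the server that owns the tool."""
    server, tool = qualified_name.split(".", 1)
    return SERVERS[server][tool](**arguments)


# A two-step chain: the output of one server's tool becomes the input to another's.
issue = route("tracker.get_issue", issue_id=42)
receipt = route("chat.post_message", channel="#eng", text=issue)
print(aggregate_tools())
print(receipt)
```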

Explore MCP Servers by Category

Browse our comprehensive collection of MCP servers organized by use case:

Databases & Storage

PostgreSQL, MongoDB, Redis, S3, and more

Development & DevOps

Git, Docker, CI/CD, testing tools

Business & Productivity

Notion, Slack, Linear, Zendesk

AI & ML Tools

Perplexity, Ollama, memory systems

Search & Retrieval

Web search, document retrieval, APIs

Integration & Automation

Zapier, browser automation, workflows

Blockchain & Web3

Ethereum, wallets, blockchain platforms

Content & Media

YouTube, TikTok, X/Twitter, Blender

Analytics & Data

PostHog, analytics platforms, metrics

Specialized Tools

Crypto, filesystems, text-to-speech

Gaming & Entertainment

Minecraft, game integrations
